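Fine-Tuning Large Language Models (LLMs)

In natural language processing (NLP), large language models (LLMs) acquire broad linguistic knowledge and patterns by pre-training on massive text corpora. Fine-tuning adapts such a model to a specific application scenario and improves its performance on the target task.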

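Application Scenarios

Application scenarios for fine-tuned LLMs include, but are not limited to:

- Domain-specific question answering: Q&A bots for fields such as healthcare, law, or finance

- Text classification: sentiment analysis, topic classification, spam detection, and similar tasks

- Text generation: customized generation, such as writing news articles or producing technical documentation

- Machine translation: translation models for a specific language pair or for domain terminology

- Summarization: automatically generating summaries of documents, articles, or reports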

Fine-Tuning an LLM

Data Preparation

Data preparation is a key step in the fine-tuning process. It includes:

-

数据收集:收集与应用场景相关的文本数据。这些数据可以从网页、电子书籍、对话文本、多语数据、科学数据和代码段中提取。

-

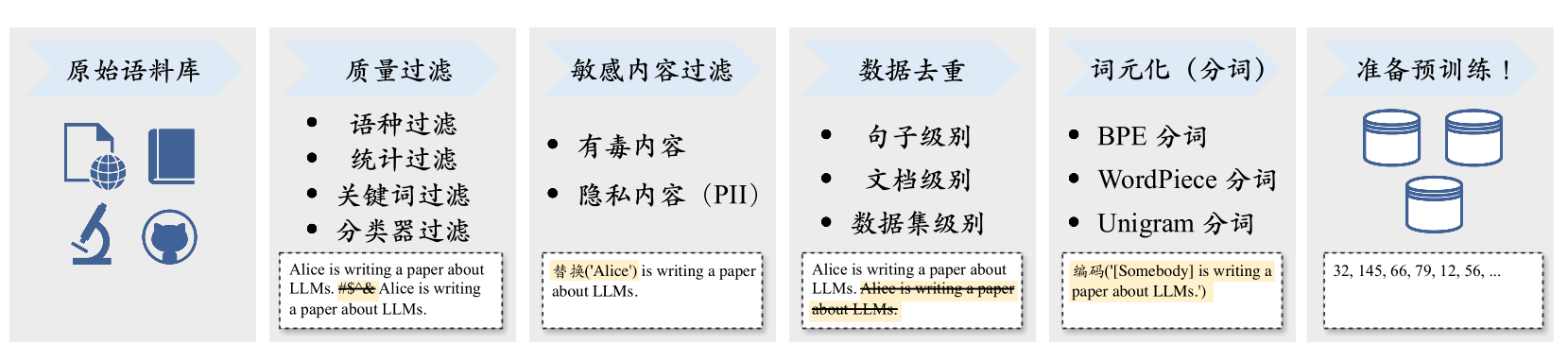

数据清洗:去除无关信息,标准化文本格式。一般会对数据做质量过滤、敏感内容过滤以及数据去重等操作。

- 数据标注:对于有监督学习,还需要对文本数据进行正确的标注。标注后的文本数据示例如下:

[

"User:Virgin Australia, the trading name of Virgin Australia Airlines Pty Ltd, is an Australian-based airline. It is the largest airline by fleet size to use the Virgin brand. It commenced services on 31 August 2000 as Virgin Blue, with two aircraft on a single route. It suddenly found itself as a major airline in Australia's domestic market after the collapse of Ansett Australia in September 2001. The airline has since grown to directly serve 32 cities in Australia, from hubs in Brisbane, Melbourne and Sydney.When did Virgin Australia start operating?</s>\n Assistant:Virgin Australia commenced services on 31 August 2000 as Virgin Blue, with two aircraft on a single route.</s>\n ",

"User:Which is a species of fish? Tope or Rope</s>\n Assistant:Tope</s>\n ",

"User:Why can camels survive for long without water?</s>\n Assistant:Camels use the fat in their humps to keep them filled with energy and hydration for long periods of time.</s>\n ",

"User:Alice's parents have three daughters: Amy, Jessy, and what’s the name of the third daughter?</s>\n Assistant:The name of the third daughter is Alice</s>\n ",

"User:Komorida was born in Kumamoto Prefecture on July 10, 1981. After graduating from high school, he joined the J1 League club Avispa Fukuoka in 2000. Although he debuted as a midfielder in 2001, he did not play much and the club was relegated to the J2 League at the end of the 2001 season. In 2002, he moved to the J2 club Oita Trinita. He became a regular player as a defensive midfielder and the club won the championship in 2002 and was promoted in 2003. He played many matches until 2005. In September 2005, he moved to the J2 club Montedio Yamagata. In 2006, he moved to the J2 club Vissel Kobe. Although he became a regular player as a defensive midfielder, his gradually was played less during the summer. In 2007, he moved to the Japan Football League club Rosso Kumamoto (later Roasso Kumamoto) based in his local region. He played as a regular player and the club was promoted to J2 in 2008. Although he did not play as much, he still played in many matches. In 2010, he moved to Indonesia and joined Persela Lamongan. In July 2010, he returned to Japan and joined the J2 club Giravanz Kitakyushu. He played often as a defensive midfielder and center back until 2012 when he retired.When was Tomoaki Komorida born?</s>\n Assistant:Tomoaki Komorida was born on July 10,1981.</s>\n "

]
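The cleaning and annotation steps above can be sketched in a few lines of Python. This is only a minimal illustration, not LM Lab's own tooling: the function names, the whitespace normalization, the minimum-answer-length filter, and the exact-match deduplication are all assumptions chosen for the example. Only the `User:...</s>\n Assistant:...</s>\n ` record layout and the `data.json` file name come from this article.

```python
import hashlib
import json
import re

EOS = "</s>"  # end-of-sequence marker used in the sample records above


def clean_text(text: str) -> str:
    """Collapse runs of whitespace into single spaces and strip both ends."""
    return re.sub(r"\s+", " ", text).strip()


def build_record(prompt: str, answer: str) -> str:
    """Render one pair in the User/Assistant layout of the sample records."""
    return f"User:{prompt}{EOS}\n Assistant:{answer}{EOS}\n "


def prepare_dataset(pairs, min_answer_chars=2):
    """Clean, filter, and deduplicate (prompt, answer) pairs.

    min_answer_chars is a hypothetical quality threshold for this sketch.
    """
    seen = set()
    records = []
    for prompt, answer in pairs:
        prompt, answer = clean_text(prompt), clean_text(answer)
        # Quality filter: skip empty prompts and very short answers.
        if not prompt or len(answer) < min_answer_chars:
            continue
        # Exact-duplicate removal, keyed on a case-insensitive hash.
        key = hashlib.sha256(
            f"{prompt}\x00{answer}".lower().encode("utf-8")
        ).hexdigest()
        if key in seen:
            continue
        seen.add(key)
        records.append(build_record(prompt, answer))
    return records


if __name__ == "__main__":
    # Illustrative input only; real pairs would come from the collected data.
    raw_pairs = [
        ("Which is a species of fish?  Tope or Rope", "Tope"),
        ("which is a species of fish? Tope or Rope", "Tope"),  # duplicate
        ("Why can camels survive for long without water?", ""),  # no answer
    ]
    records = prepare_dataset(raw_pairs)
    with open("data.json", "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)
    print(f"wrote {len(records)} record(s) to data.json")
```

Real pipelines usually add sensitive-content filtering and near-duplicate detection on top of this; both are left out here to keep the sketch short.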

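Once the steps above are complete, the fine-tuning dataset can be managed through the data management module of LM Lab:

Data sources supported by LM Lab: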

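| Data source | Usage notes |
|---|---|
| Local upload | Supports uploading local files and folders |
| Local path | A storage path on the local server |
| FTP | Requires the FTP address, username, and password |
| Object storage | Requires the object storage address, Bucket name, AccessKey, and AccessKeySecret |
| Hyperlink | Requires the URL |
| Hugging Face | Requires the address of the Dataset in the Hugging Face community |
| AI Market | Choose from the datasets preset in the AI Market |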

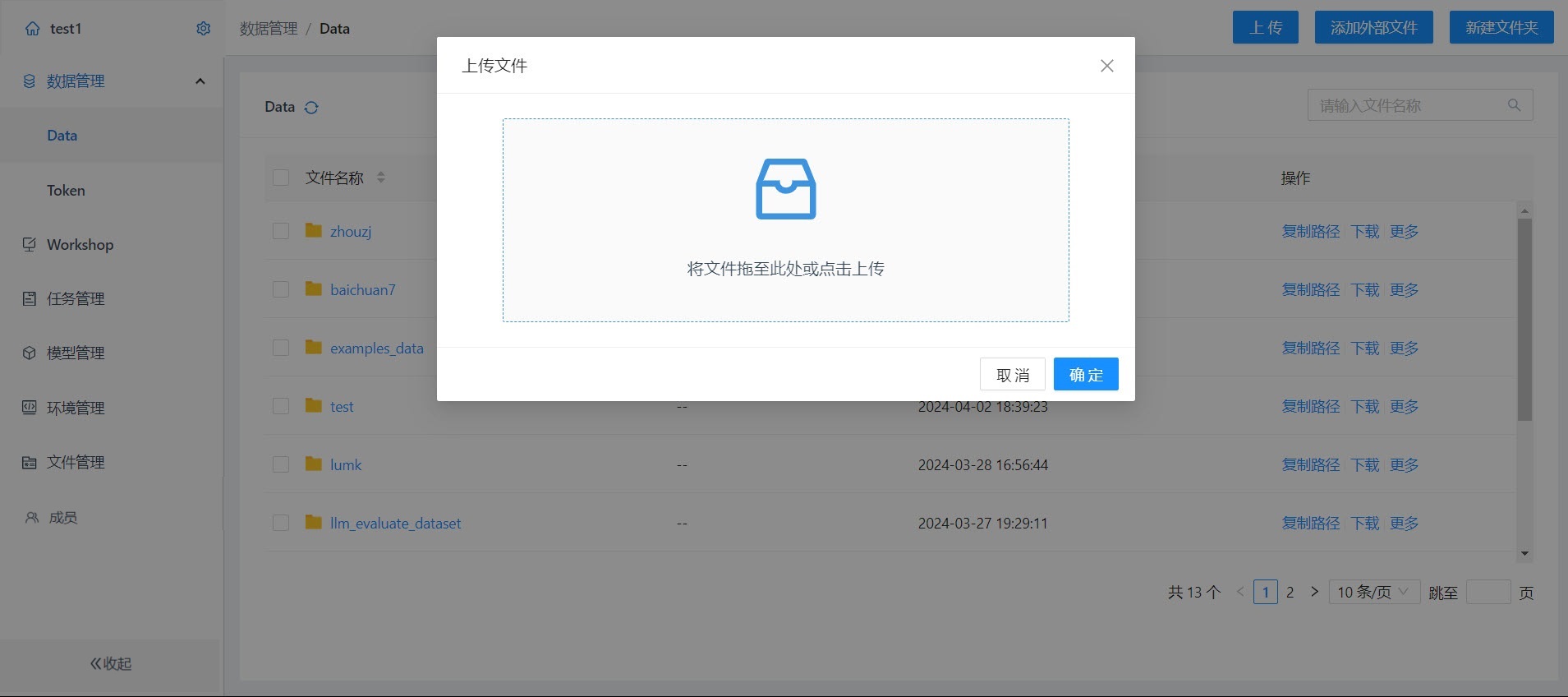

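- In the left-hand menu, click "Tools", then select and open "LM Lab". In the LM Lab sidebar, choose Data under Data Management to open the data file list, then click Upload File under the Upload button and add the local file data.json.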

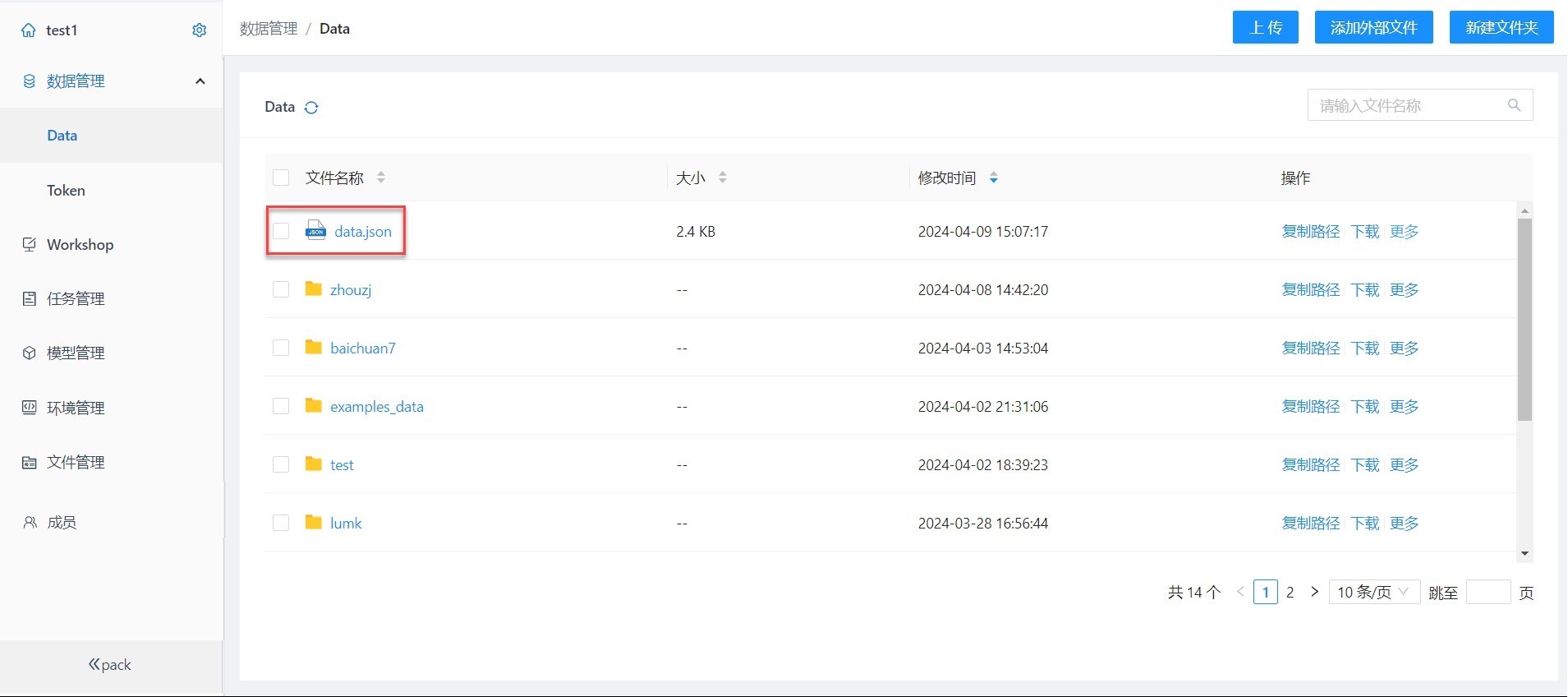

- After the upload, the data.json file appears in the Data list.

Managing data from other sources follows a similar flow; see the LM Lab user manual for details.

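Model Selection

Choosing a suitable pre-trained model is the foundation of successful fine-tuning:

- Choose a suitable architecture: for example, an encoder-only Transformer such as BERT or a decoder-only Transformer such as GPT

- Consider model size: pick a size that fits both the available compute resources and the requirements of the task

- LM Lab: provides a model management module that keeps large models of various architectures and sizes in one place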
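To make the model-size consideration concrete, a rough memory estimate can be worked out from the parameter count alone. The sketch below is a rule of thumb, not a measurement: it assumes 2 bytes per parameter for half-precision weights only, and about 16 bytes per parameter for full fine-tuning with Adam in mixed precision (fp16 weights and gradients plus fp32 master weights and two optimizer moments). Activation memory, which depends on batch size and sequence length, is ignored, and the 7-billion-parameter figure is just an illustrative size.

```python
# Bytes of accelerator memory per parameter under two common assumptions.
BYTES_FP16_WEIGHTS = 2         # half-precision weights only
BYTES_FULL_FINETUNE_ADAM = 16  # 2 (fp16 weights) + 2 (fp16 grads) + 4 + 4 + 4 (fp32 weights, Adam m, Adam v)


def estimate_gib(num_params: float, bytes_per_param: int) -> float:
    """Return the estimated memory footprint in GiB, excluding activations."""
    return num_params * bytes_per_param / 1024**3


if __name__ == "__main__":
    num_params = 7e9  # hypothetical 7B-parameter model
    print(f"weights only:     {estimate_gib(num_params, BYTES_FP16_WEIGHTS):.1f} GiB")
    print(f"full fine-tuning: {estimate_gib(num_params, BYTES_FULL_FINETUNE_ADAM):.1f} GiB")
```

Under these assumptions a 7B model needs roughly 13 GiB just to hold its weights and roughly 104 GiB for full fine-tuning state, which is why the available hardware often decides the model size before the task does.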

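The AI Market in LM Lab comes with a set of mainstream large models preinstalled. Users can add AI Market models in model management, or add other models as needed; LM Lab offers several ways to add a model.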

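Parameter Configuration

In LM Lab, the Workshop module gives users a workspace for training large models on their own, including fine-tuning tasks built on a base large language model.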

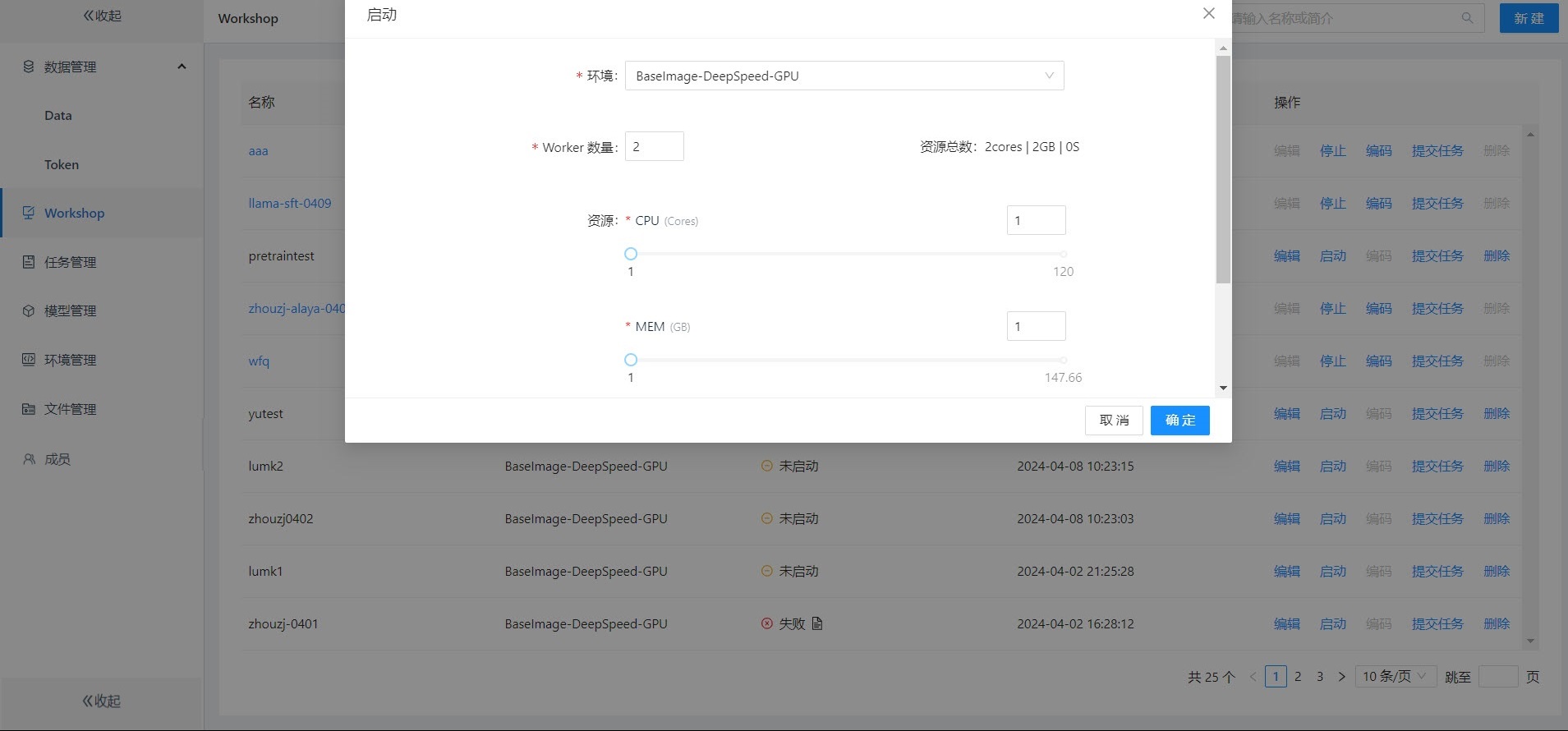

- After creating a new Workshop, configure the resources it will use:

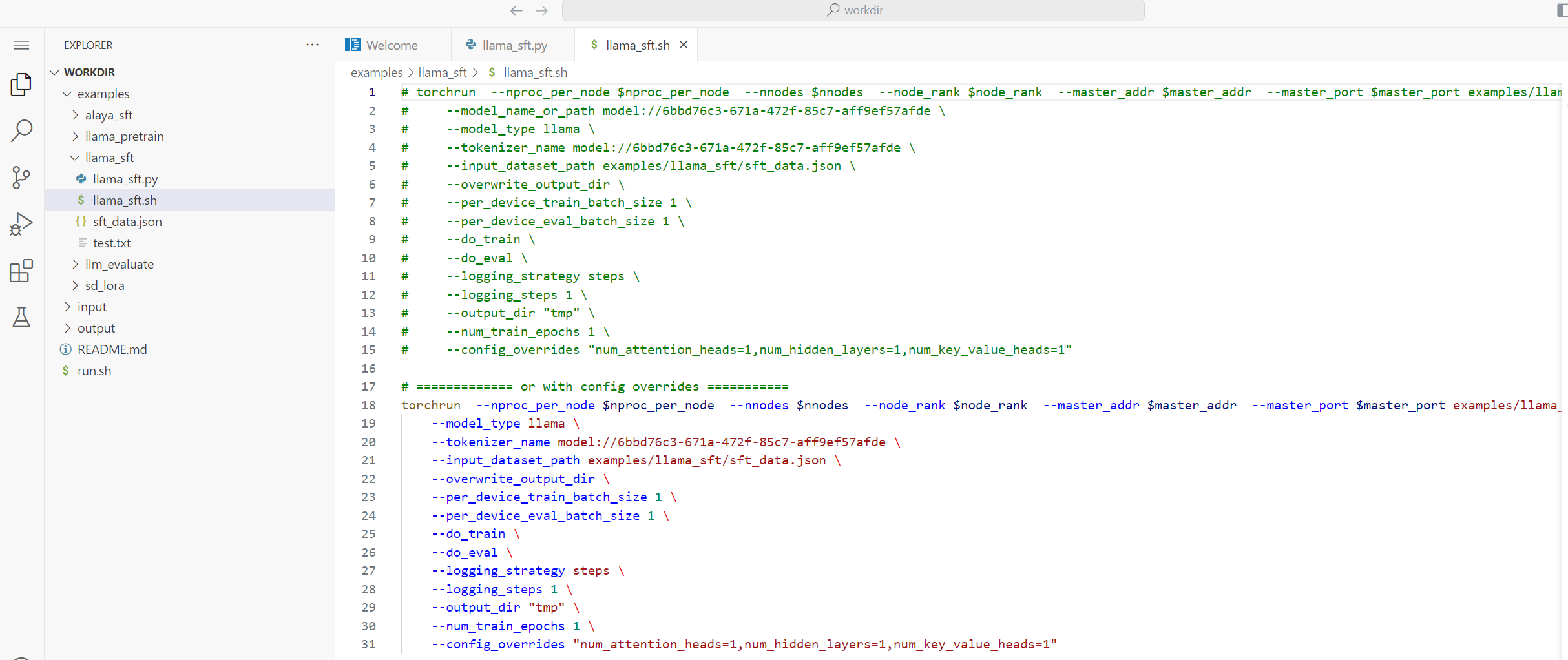

- Starting the Workshop opens LM Lab's built-in editor page, which provides some sample code in the examples directory.

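Depending on their needs, users can fine-tune either a preset model or a custom model.

If the preset llama large language model is used as the base model for fine-tuning, a few training parameters need to be adjusted:

- Learning rate: set a learning rate suited to the fine-tuning task. If you are unsure how to tune it, use the default value provided in the code

- Batch size: choose the batch size according to memory limits and dataset size. The corresponding parameter can be adjusted in the launch script

- Number of epochs: decide how many epochs or iterations the model should train for. Parameters such as epoch can be adjusted in the launch script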

Once the parameters in the fine-tuning launch script have been adjusted, run the launch script directly to start training.
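The three parameters interact: together with the dataset size, batch size and epoch count determine how many optimizer steps a run takes. The sketch below shows that arithmetic. It is not LM Lab's launch script: the class, its field names, and every default value are hypothetical, and gradient accumulation and device count are extra assumptions added because they commonly affect the effective batch size.

```python
import math
from dataclasses import dataclass


@dataclass
class FinetuneConfig:
    """Hypothetical hyperparameter bundle; values are illustrative only."""

    learning_rate: float = 2e-5
    per_device_batch_size: int = 4
    gradient_accumulation_steps: int = 8
    num_devices: int = 1
    num_epochs: int = 3

    @property
    def effective_batch_size(self) -> int:
        """Samples consumed per optimizer step across all devices."""
        return (
            self.per_device_batch_size
            * self.gradient_accumulation_steps
            * self.num_devices
        )

    def steps_per_epoch(self, num_samples: int) -> int:
        """Optimizer steps needed to see every sample once (last batch may be partial)."""
        return math.ceil(num_samples / self.effective_batch_size)

    def total_steps(self, num_samples: int) -> int:
        """Optimizer steps for the whole run."""
        return self.steps_per_epoch(num_samples) * self.num_epochs


if __name__ == "__main__":
    cfg = FinetuneConfig()
    num_samples = 10_000  # illustrative dataset size
    print("effective batch size:", cfg.effective_batch_size)
    print("steps per epoch:", cfg.steps_per_epoch(num_samples))
    print("total steps:", cfg.total_steps(num_samples))
```

Knowing the total step count up front helps when choosing a learning-rate schedule or estimating how long a run will occupy the Workshop's resources.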

Training Results

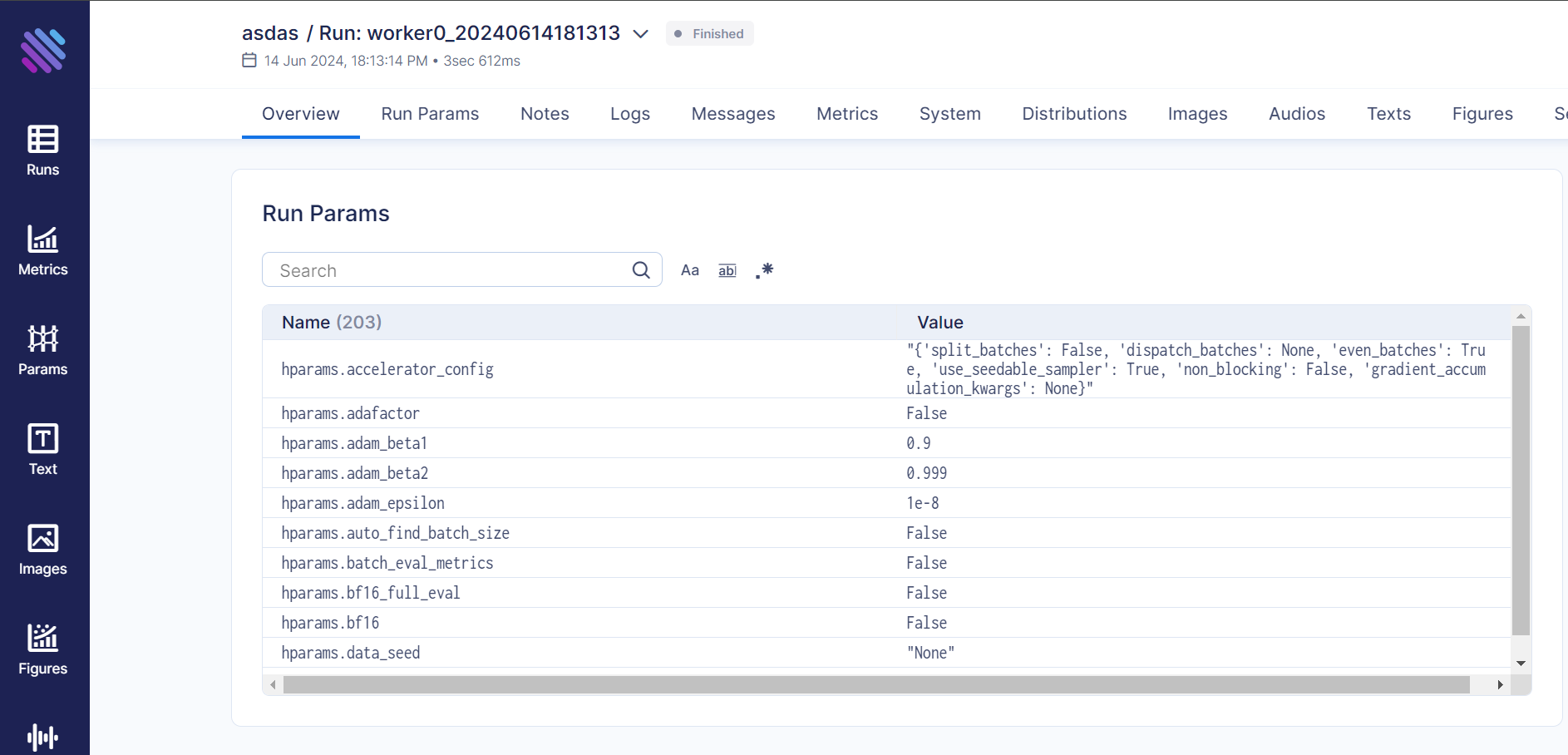

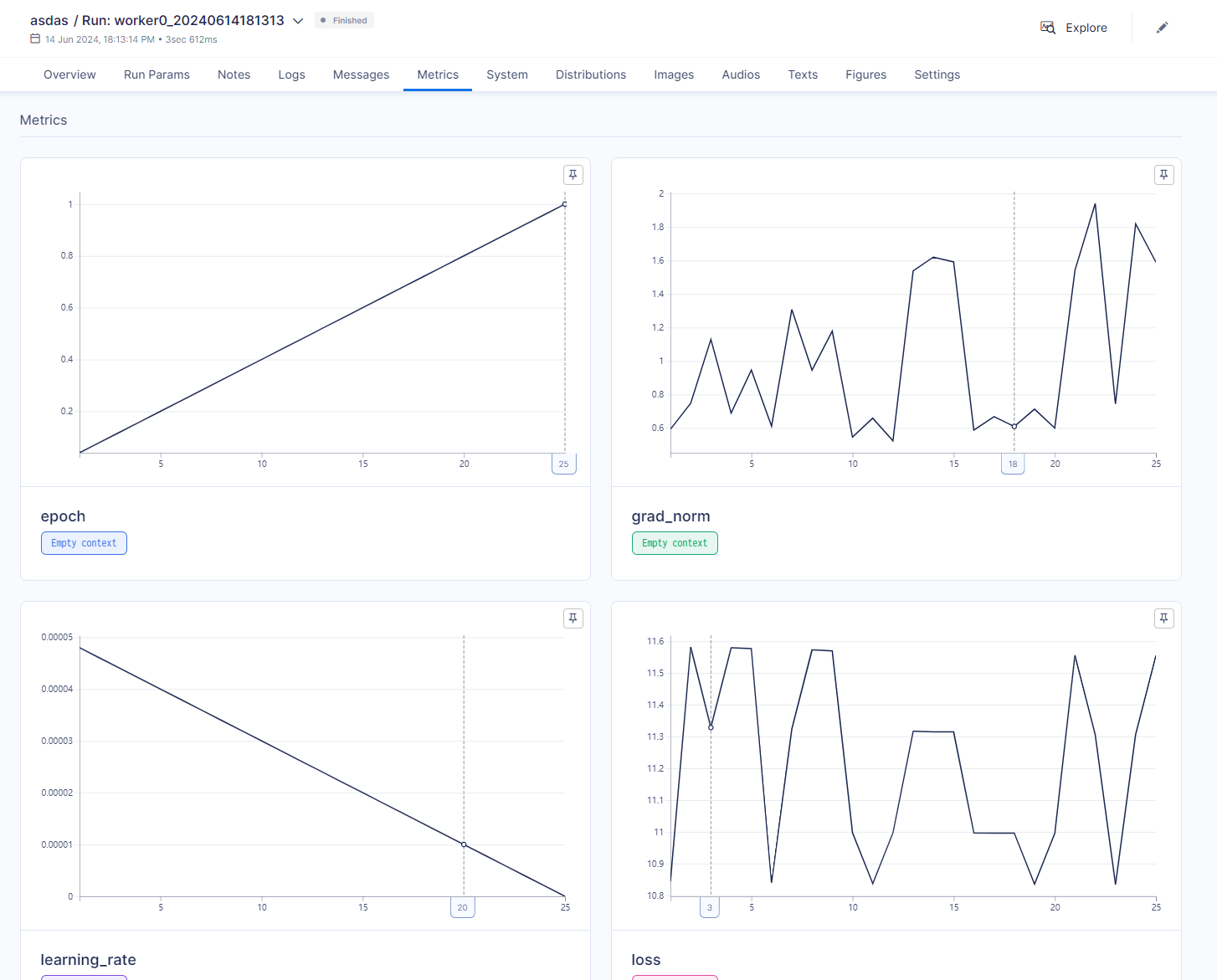

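Training logs and results can be viewed under "Training Details" in the task management module of LM Lab, which shows the hyperparameters, the Loss curve, and other information for monitoring the fine-tuning run.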
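Raw per-step loss values are noisy, so a loss curve is easier to read after smoothing. The sketch below applies an exponential moving average to a list of loss values. It assumes the values have been copied out of a training log by hand; the function name, the smoothing factor, and the sample numbers are all hypothetical, and nothing here relies on an LM Lab export feature.

```python
def ema_smooth(values, alpha=0.1):
    """Exponentially smooth a sequence; higher alpha follows the raw values more closely."""
    smoothed = []
    current = None
    for v in values:
        # The first point seeds the average; later points blend in with weight alpha.
        current = v if current is None else alpha * v + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


if __name__ == "__main__":
    # Illustrative loss values only, not results from a real run.
    losses = [2.9, 2.4, 2.6, 2.1, 2.2, 1.8, 1.9, 1.6]
    for step, (raw, smooth) in enumerate(zip(losses, ema_smooth(losses, alpha=0.3))):
        print(f"step {step}: raw={raw:.2f} smoothed={smooth:.2f}")
```

A smoothed curve that keeps falling suggests training is still making progress, while one that flattens early may point to a learning rate that is too low or a dataset that is too small.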

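Fine-tuning a large language model is a complex but promising process; the right methods and strategies can markedly improve a model's performance on a specific task. As NLP technology continues to advance, fine-tuned LLMs will play an important role in more and more domains.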